How to Find Missing Nx Target Inputs

Nx decides whether a task can be served from its cache by hashing the task’s inputs. However, these inputs can be misconfigured or incomplete, which leads to false cache hits, a serious problem for reliable builds. In this post, we explore how to use eBPF to trace file accesses during target execution to identify missing inputs.

Nx Target Inputs and Caching

Nx is a build system that speeds up CI/CD pipelines by caching task results. When you run a target such as build, test, or lint (a single run of a target is called a task), Nx computes a hash from the target’s inputs: the files and configuration that affect its output. Each target should be deterministic, meaning that the same inputs always produce the same outputs, so the task can be skipped and its result pulled from the cache. If the hash of all inputs matches a previous execution (that is, the same hash is already stored in the Nx cache), Nx restores the cached output instead of re-running the task. This dramatically reduces build times in monorepos and is the main advantage of using Nx. Inputs are specified as glob patterns that determine which files invalidate the cache, either in nx.json under targetDefaults or directly on a target in a project.json, which overrides the targetDefaults:

```json
{
  "targetDefaults": {
    "build": {
      "inputs": [
        "{projectRoot}/**/*",
        "!{projectRoot}/**/*.spec.ts",
        "{workspaceRoot}/global-config.json",
        "production"
      ]
    },
    "test": {
      "inputs": [
        "{projectRoot}/src/**/*.ts",
        "{projectRoot}/tsconfig.spec.json",
        "default"
      ]
    }
  }
}
```

In this example, the build target includes all files in the project root except test files, a global configuration file, and the “production” named input. The test target includes the project’s TypeScript sources, the TypeScript test configuration, and the “default” named input. Nx hashes the matched files into a cache key: if any input file changes, the hash changes and the cached result is no longer used.
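To make the hashing idea concrete, here is a conceptual TypeScript model of an input-based cache key. It is not Nx’s implementation (Nx’s hasher works differently and also hashes more than file contents); the in-memory workspace map and the `computeCacheKey` and `isBuildInput` names are hypothetical, chosen only for illustration.

```typescript
import { createHash } from "node:crypto";

// Hypothetical in-memory workspace: workspace-relative path -> file content.
type Workspace = ReadonlyMap<string, string>;

// Conceptual model only: hash the path and content of every file selected as an input.
function computeCacheKey(workspace: Workspace, isInput: (path: string) => boolean): string {
  const hash = createHash("sha256");
  // Sort so the key does not depend on map insertion order.
  const inputPaths = Array.from(workspace.keys()).filter(isInput).sort();
  for (const path of inputPaths) {
    // NUL separators keep ("ab", "c") distinct from ("a", "bc").
    hash.update(path).update("\0").update(workspace.get(path) ?? "").update("\0");
  }
  return hash.digest("hex");
}

// Roughly mirrors the project-file part of the build inputs above for a project
// rooted at apps/app: "{projectRoot}/**/*" minus "!{projectRoot}/**/*.spec.ts".
const isBuildInput = (path: string): boolean =>
  path.startsWith("apps/app/") && !path.endsWith(".spec.ts");
```

With this model, editing `apps/app/src/main.ts` changes the key, while editing a spec file or a file outside the project (say, a code generator under `tools/`) does not, even if the build actually reads that file. That last case is exactly the false cache hit described next.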

The Problem of Missing Inputs

Although glob patterns for inputs are flexible and cover many scenarios, Nx inputs can still be misconfigured or incomplete. This is a serious problem because it can lead to false cache hits: Nx decides the target can be skipped and restores its output from the cache, although re-running the target would produce a different output. This can lead to:

- Stale builds that don’t reflect the latest code changes

- Inconsistent test results

- Deployment of outdated artifacts

To ensure reliable builds, it’s crucial to validate that all necessary files are included in the target inputs. However, there is no built-in way in Nx to verify that the defined inputs are complete and correct. Whereas other build engines like BuildXL or Bazel have sandboxing mechanisms that restrict file access during target execution, Nx does not enforce such restrictions. This means a target can read files outside of its defined inputs without any indication, leading to potential cache inconsistencies. You would not even know…

Think this is just a theoretical problem? In practice, I have seen multiple cases in large Nx monorepos where targets accessed files outside their defined inputs, leading to wrong cache hits and unreliable builds. Here are two real-world examples:

- Misconfigured lint target, missing child project source files as inputs

- Build target with a custom Nx executor missing the executor source files as inputs

1. Misconfigured Lint Target

Lint target inputs are often defined like this:

```json
"lint": {
  "inputs": [
    "{projectRoot}/**/*.ts",
    "{projectRoot}/.eslintrc.json",
    "{workspaceRoot}/.eslintrc.json",
    "default"
  ]
}
```

This might work for some projects, but as soon as you add type-aware lint rules or the @nx/enforce-module-boundaries rule, these inputs are incomplete. Why? The lint target only includes source files from the current project, but type-aware linting and module boundary checks also depend on source files from other projects in the monorepo to validate imports and types. If those files are not part of the lint inputs, Nx can restore a cached lint result that does not reflect the current state of the codebase, and lint errors slip through.

To fix this, include the source files of the relevant projects in the lint inputs, for example:

```json
"namedInputs": {
  "lintProject": [
    "{projectRoot}/**/*.ts",
    "{projectRoot}/.eslintrc.json"
  ]
},
"targetDefaults": {
  "lint": {
    "inputs": [
      "lintProject",
      "^lintProject",
      "{workspaceRoot}/.eslintrc.json",
      "default"
    ]
  }
}
```

Here, we define a named input lintProject for the current project’s source files and ESLint config, and then use ^lintProject to include the same inputs from the projects the current project depends on. Changes in those projects now invalidate the cached lint result, so type-aware and module boundary rules are re-evaluated when their inputs change.
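To see what `^lintProject` adds to the hash, here is a simplified TypeScript model of how a `^`-prefixed named input fans out over a project’s dependencies. The graph shape and the `resolveInputs` and `transitiveDeps` helpers are hypothetical. The sketch assumes the dependency expansion is transitive (consistent with the hash plan including inputs from dependencies) and does not expand named inputs that reference other named inputs.

```typescript
// Hypothetical, simplified project graph: project name -> root and direct dependencies.
type ProjectGraph = Readonly<Record<string, { readonly root: string; readonly deps: readonly string[] }>>;
type NamedInputs = Readonly<Record<string, readonly string[]>>;

// All projects reachable through dependency edges, excluding the start project.
function transitiveDeps(graph: ProjectGraph, project: string): string[] {
  const seen = new Set<string>();
  const stack = [...graph[project].deps];
  while (stack.length > 0) {
    const next = stack.pop()!;
    if (next === project || seen.has(next)) continue; // guards against cycles
    seen.add(next);
    stack.push(...graph[next].deps);
  }
  return Array.from(seen);
}

// Expands a target's inputs into concrete glob patterns (nested named inputs not modeled).
function resolveInputs(
  graph: ProjectGraph,
  namedInputs: NamedInputs,
  project: string,
  inputs: readonly string[],
): string[] {
  const patterns = new Set<string>();
  const addNamed = (name: string, owner: string): void => {
    for (const pattern of namedInputs[name] ?? []) {
      patterns.add(pattern.replace("{projectRoot}", graph[owner].root));
    }
  };
  for (const input of inputs) {
    if (input.startsWith("^")) {
      // "^name": evaluate the named input once per dependency project.
      for (const dep of transitiveDeps(graph, project)) addNamed(input.slice(1), dep);
    } else if (input in namedInputs) {
      addNamed(input, project);
    } else {
      // Plain patterns; "{workspaceRoot}" is left as-is.
      patterns.add(input.replace("{projectRoot}", graph[project].root));
    }
  }
  return Array.from(patterns).sort();
}
```

For an app that depends on `lib-a`, which in turn depends on `lib-b`, resolving `["lintProject", "^lintProject"]` yields the `*.ts` and `.eslintrc.json` patterns of all three projects; without the `^` entry, only the app’s own patterns remain.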

This is easy to overlook, especially in large monorepos, because Nx gives no feedback or warning about missing inputs. Developers may assume that the default lint inputs are sufficient, which leads to unreliable lint results.

2. Custom Build Executor Missing Source Files

In another case, a custom build executor was implemented for a project. The build target inputs were defined as:

```json
"build": {
  "executor": "./tools/executors/custom-build:build",
  "inputs": [
    "{projectRoot}/**/*",
    "{workspaceRoot}/global-config.json",
    "production"
  ]
}
```

In this scenario, the build target uses a custom Nx executor located in ./tools/executors/custom-build. Custom executors are provided by Nx plugins and implement how a target runs. However, the build target’s inputs did not include the source files of the executor itself, even though those files were loaded and run during execution. Because the executor source files were not part of the defined inputs, Nx could not account for changes to the executor code when computing the cache key. As a result, changes to the custom executor did not invalidate the build cache, and the cached outputs did not reflect updates to the build logic.

To fix this, the build target inputs need to include the custom executor’s source files, for example:

```json
"build": {
  "executor": "./tools/executors/custom-build:build",
  "inputs": [
    "{workspaceRoot}/tools/executors/custom-build/**/*",
    "{projectRoot}/**/*",
    "{workspaceRoot}/global-config.json",
    "production"
  ]
}
```

Again, missing inputs like this lead to unreliable builds and are hard to detect without tracing file accesses during target execution.
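If many projects use local executors, a small helper can derive the matching input glob from the executor reference. The sketch below is hypothetical (`executorInputGlob` is not an Nx API): it assumes, as in the example above, that the part before the colon is a workspace-relative directory containing the executor’s code, and it ignores executors that come from installed packages.

```typescript
// Hypothetical helper: derives an input glob from a local executor reference
// of the form "./<relative-path>:<executor-name>".
function executorInputGlob(executor: string): string | undefined {
  const separator = executor.lastIndexOf(":");
  if (separator <= 0) return undefined;
  const pluginPath = executor.slice(0, separator);
  // Only "./"-relative paths are treated as local executors; references such as
  // "@nx/js:tsc" or "nx:run-commands" point at installed packages instead.
  if (!pluginPath.startsWith("./")) return undefined;
  const dir = pluginPath.slice(2).replace(/\/+$/, ""); // drop "./" and trailing slashes
  return `{workspaceRoot}/${dir}/**/*`;
}
```

For `./tools/executors/custom-build:build`, this returns the same glob that was added to the inputs above.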

No Built-in Validation in Nx

Sadly, Nx does not support sandboxing or tracing of file accesses during target execution. This means there is no built-in way to validate that all necessary files are included in the target inputs. Without such validation, developers must manually ensure that their input definitions are complete and correct, which is error-prone and difficult to maintain in large monorepos.

If you are in favor of having such a feature in Nx, please upvote the following feature request on the Nx GitHub repository: Feature Request: Sandboxing for Nx

In the meantime, the next section shows how I used eBPF to trace file accesses during an isolated target execution to identify missing inputs.

Tracing File Accesses with eBPF

Disclaimer: The following approach requires Linux with eBPF support and is a bit fuzzy.

The key idea behind sandboxing in other build systems is to observe which files a target accesses during its execution. If a target reads files that are not part of its defined inputs, those inputs are missing and should be added to ensure reliable caching. To achieve this for Nx, we can use eBPF (extended Berkeley Packet Filter) to trace file accesses at the kernel level for the duration of a target execution. eBPF lets us attach programs to kernel events, including file system calls, so we can monitor which files a process opens, reads, or writes. eBPF is also widely used in Kubernetes environments for observability and security, and in BuildXL for sandboxing.

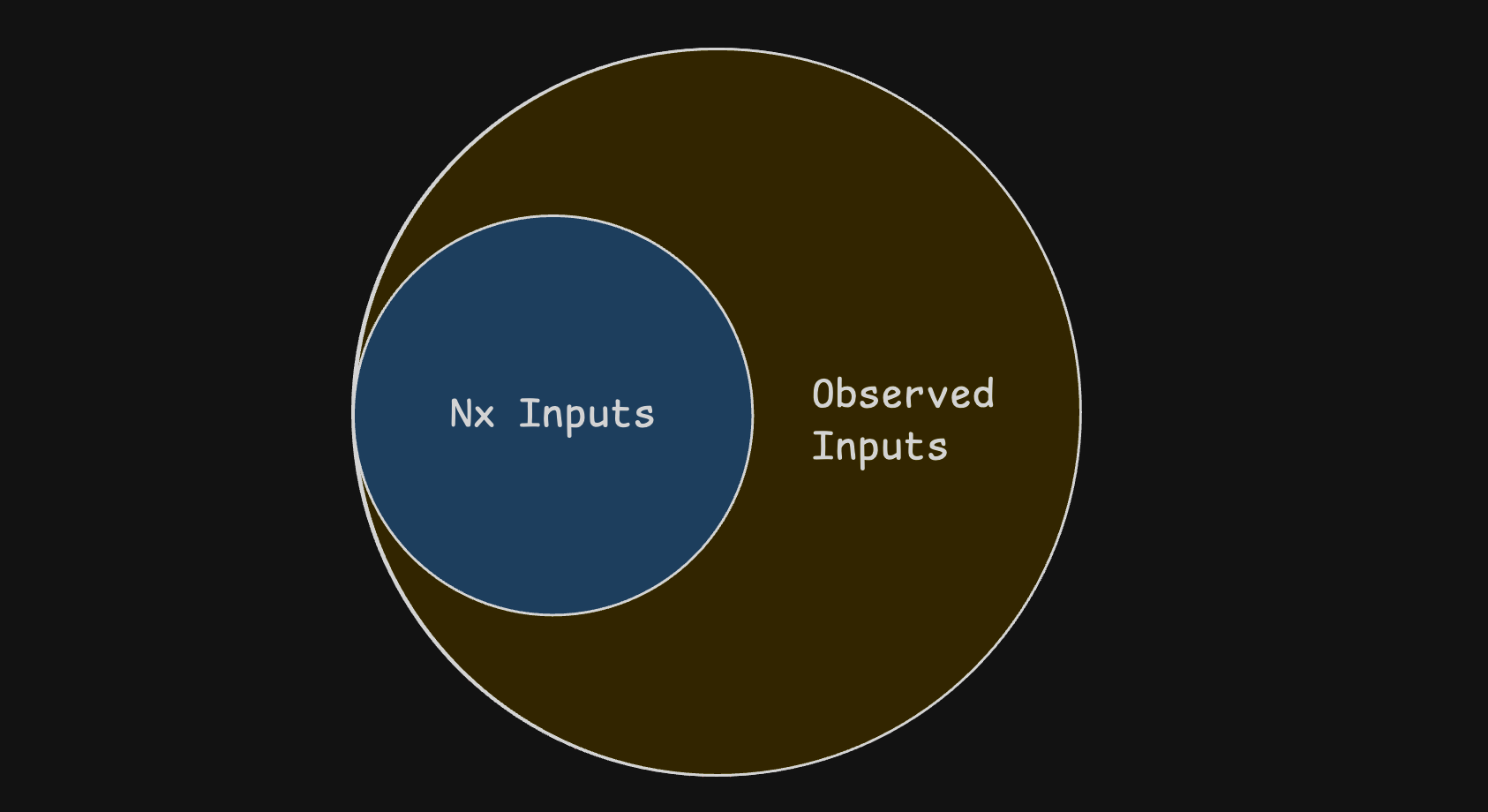

To validate the inputs, we compare the list of traced file accesses against the target’s resolved input files: any workspace file that the target read but that is not an input is a candidate missing input.
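The comparison itself is a set difference. A minimal TypeScript sketch, assuming both lists use the same workspace-relative path format; `findMissingInputs` is a hypothetical name:

```typescript
// Returns traced files that are not among the target's resolved input files.
// Both lists must use the same path format (here: workspace-relative paths).
function findMissingInputs(tracedReads: readonly string[], inputFiles: readonly string[]): string[] {
  const inputs = new Set(inputFiles);
  // A Set also removes duplicates, since the same file is often read many times.
  const missing = new Set(tracedReads.filter((file) => !inputs.has(file)));
  return Array.from(missing).sort();
}
```

Each result is only a candidate: review the list before adding inputs, since some reads may come from unrelated processes.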

1. Getting Nx Inputs using the HashPlan Inspector

Since Nx 22, Nx has an internal API for inspecting the hash plan of a target, which reveals the exact files that go into the hash of a specific target. We can use this API to retrieve the list of input files for a target programmatically before executing it. Because it is imported from an internal path (nx/src/...), it is not part of the public API and may change between releases. Here is an example of how to use the HashPlanInspector:

```javascript
const { createProjectGraphAsync } = require('@nx/devkit');
// Internal Nx module, not part of the public API
const { HashPlanInspector } = require('nx/src/hasher/hash-plan-inspector');

async function main() {
  const graph = await createProjectGraphAsync();
  const hashPlanInspector = new HashPlanInspector(graph);
  await hashPlanInspector.init();

  const task = {
    project: 'project-name',
    target: 'lint',
  };

  // Print the resolved inputs of the task as pretty-printed JSON
  console.log(
    JSON.stringify(hashPlanInspector.inspectTask(task), null, 4)
  );
}

main().catch((error) => {
  console.error(error);
  process.exit(1);
});
```

This prints all Nx inputs of the target, including those from its dependencies.
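To compare the hash plan against traced reads, you need a flat list of file paths. The sketch below rests on an assumption that is not confirmed here: that `inspectTask` returns an object mapping task ids to arrays of input strings, with file inputs prefixed by `file:`. Print the output once for your Nx version and adjust the prefix if it differs. `extractInputFiles` is a hypothetical helper.

```typescript
// ASSUMED shape of the inspectTask result: task id -> list of input strings,
// where file inputs look like "file:<workspace-relative path>".
type HashPlanResult = Readonly<Record<string, readonly string[]>>;

function extractInputFiles(plan: HashPlanResult, filePrefix = "file:"): string[] {
  const files = new Set<string>();
  for (const taskId of Object.keys(plan)) {
    for (const entry of plan[taskId]) {
      // Keep only file inputs; other entries (environment, runtime, ...) are skipped.
      if (entry.startsWith(filePrefix)) files.add(entry.slice(filePrefix.length));
    }
  }
  return Array.from(files).sort();
}
```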

2. Tracing File Accesses with eBPF

To trace file accesses during the execution of an Nx target, we can use an eBPF tracing tool like BCC (BPF Compiler Collection) or bpftrace. Below is an example bpftrace script that remembers which file each openat call opened (per process and file descriptor) and prints a line whenever a process reads from one of those files. Note that it traces every process on the machine, not a single one:

```
tracepoint:syscalls:sys_enter_openat {
  // Remember the path this process is opening
  @filename[pid] = str(args->filename);
}

tracepoint:syscalls:sys_exit_openat
/ @filename[pid] != "" / {
  // Associate the returned file descriptor with the remembered path
  @files[pid, args->ret] = @filename[pid];
  delete(@filename[pid]);
}

tracepoint:syscalls:sys_enter_read
/ @files[pid, args->fd] != "" / {
  printf("read pid=%d file=%s\n", pid, @files[pid, args->fd]);
}
```

With both of these pieces in place, we can run an Nx target in isolation while tracing all file accesses with eBPF. After the target completes, we compare the traced file accesses against the defined inputs to identify any missing inputs.

Note: This is fuzzy, because the trace also catches file reads from other processes (the shell, Node.js, language servers, VS Code, and so on), which you have to filter out manually. Run the target multiple times in a clean environment, ideally a container or VM, to minimize noise.
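One way to use those repeated runs is to keep only the files that appear in every run’s trace, on the heuristic that unrelated processes read different files each time while the target’s own reads repeat. A minimal TypeScript sketch (`intersectRuns` is a hypothetical helper); it can also drop genuine reads that vary between runs and keep background noise that repeats just as reliably, so treat the result as input for manual review.

```typescript
// Keeps only the files present in every run's trace.
function intersectRuns(runs: readonly (readonly string[])[]): string[] {
  if (runs.length === 0) return [];
  let common = new Set(runs[0]);
  for (const run of runs.slice(1)) {
    const current = new Set(run);
    common = new Set(Array.from(common).filter((file) => current.has(file)));
  }
  return Array.from(common).sort();
}
```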

To run an Nx target in isolation, pass the --excludeTaskDependencies and --skipNxCache flags so that only the specified target runs, without its task dependencies and without being restored from the cache.

Full Script

Here is a full example script that combines the above steps: it runs an Nx target under bpftrace and returns the workspace files the target read, ready to be compared against the inputs from the hash plan:

```typescript
import { exec, spawn } from 'child_process';
import { createInterface } from 'readline';

interface TraceOptions {
  readonly verbose?: boolean;
}

/**
 * Traces file reads during an Nx task execution using eBPF/bpftrace.
 * Requires bpftrace to be installed and permission to run it via sudo.
 *
 * @param nxTarget - The Nx target to run (e.g., 'lint', 'build')
 * @param nxProject - The Nx project name
 * @param options - Optional configuration
 * @param options.verbose - Enable verbose logging to console
 * @returns Promise that resolves to a sorted array of unique file paths (relative to the workspace root) that were read during execution
 */
export async function traceNxTargetFileReadsAsync(
  nxTarget: string,
  nxProject: string,
  options: TraceOptions = {}
): Promise<readonly string[]> {
  const { verbose = false } = options;

  // Filtering out irrelevant files and directories to have less noise
  const ignoredDirs = ['node_modules', '.nx', '.angular', '.git', 'tmp'] as const;
  const ignoredExtensions = ['.yml', '.yaml', '.log'] as const;
  const ignoredFiles = ['project.json', '.gitignore', 'package.json'] as const;
  // Absolute path of the workspace checkout; adjust to your environment
  const workspaceRoot = '/workspaces/org/';

  let bpfProcess: ReturnType<typeof spawn> | undefined;
  const fileReads = new Set<string>();
  const initialPids = new Set<number>();
  const newPids = new Set<number>();

  // Capture PIDs that exist before we start the Nx task
  await new Promise<void>((resolve, reject) => {
    exec('ps -eo pid', (error, stdout) => {
      if (error) {
        reject(error);
        return;
      }
      stdout.split('\n')
        .slice(1) // Skip header
        .forEach(line => {
          const pid = parseInt(line.trim(), 10);
          if (!isNaN(pid)) {
            initialPids.add(pid);
          }
        });
      resolve();
    });
  });

  const bpftracePromise = new Promise<number>((resolve, reject) => {
    const tracer = spawn('sudo', ['-E', 'bpftrace', '-e', `
      tracepoint:syscalls:sys_enter_openat {
        @filename[pid] = str(args->filename);
      }
      tracepoint:syscalls:sys_exit_openat
      / @filename[pid] != "" / {
        @files[pid, args->ret] = @filename[pid];
        delete(@filename[pid]);
      }
      tracepoint:syscalls:sys_enter_read
      / @files[pid, args->fd] != "" / {
        printf("read pid=%d file=%s\\n", pid, @files[pid, args->fd]);
      }
    `]);
    bpfProcess = tracer;

    // Consume stdout line by line so that lines split across data chunks stay intact
    createInterface({ input: tracer.stdout }).on('line', (line: string) => {
      // Match format: "read pid=<pid> file=<filepath>". The map dump bpftrace
      // prints on exit ("@files[...]: ...") does not match and is ignored.
      const match = line.match(/^read pid=(\d+) file=(.+)$/);
      if (!match) {
        return;
      }
      const pid = parseInt(match[1], 10);
      // Skip if this PID existed before we started
      if (initialPids.has(pid)) {
        return;
      }
      // Track this as a new PID
      if (!newPids.has(pid)) {
        newPids.add(pid);
        if (verbose) console.log(`[bpftrace] Tracking new PID: ${pid}`);
      }
      const filePath = match[2].trim();
      // Skip directory paths (ending with /)
      if (filePath.endsWith('/')) {
        return;
      }
      const isInWorkspace = filePath.startsWith(workspaceRoot);
      const isNotIgnoredDir = !ignoredDirs.some(dir =>
        filePath.includes(`/${dir}/`) || filePath.endsWith(`/${dir}`)
      );
      const isNotIgnoredExt = !ignoredExtensions.some(ext => filePath.endsWith(ext));
      // Match whole file names only, so e.g. "tsconfig.package.json" is kept
      const isNotIgnoredFile = !ignoredFiles.some(file => filePath.endsWith(`/${file}`));
      if (isInWorkspace && isNotIgnoredDir && isNotIgnoredExt && isNotIgnoredFile) {
        if (verbose) console.log(`[bpftrace] PID ${pid}: ${filePath}`);
        fileReads.add(filePath);
      }
    });

    tracer.stderr.on('data', (data: Buffer) => {
      const stderr = data.toString();
      if (verbose && !stderr.includes('Attaching')) {
        console.error(`[bpftrace stderr] ${stderr}`);
      }
    });

    tracer.on('error', reject);
    tracer.on('exit', (code) => {
      resolve(code ?? 0);
    });
  });

  // Give bpftrace time to attach its probes before the task starts
  await new Promise(resolve => setTimeout(resolve, 1000));

  const stopTracing = () => {
    if (bpfProcess && !bpfProcess.killed) {
      bpfProcess.kill('SIGINT');
    }
  };

  const nxPromise = new Promise<number>((resolve, reject) => {
    // spawn (instead of exec) avoids exec's output buffer limit, which would kill
    // a chatty Nx run early, and passes project/target names without a shell
    const nxProcess = spawn(
      'pnpm',
      ['nx', 'run', `${nxProject}:${nxTarget}`, '--excludeTaskDependencies', '--skipNxCache'],
      {
        env: { ...process.env, NX_DAEMON: 'true', NX_CACHE_PROJECT_GRAPH: 'true' },
        stdio: verbose ? 'inherit' : 'ignore',
      }
    );
    nxProcess.on('error', (error) => {
      stopTracing();
      reject(error);
    });
    nxProcess.on('exit', (code) => {
      stopTracing();
      resolve(code ?? 0);
    });
  });

  await nxPromise;
  await bpftracePromise;

  return Array.from(fileReads)
    .sort()
    .map(f => f.replace(workspaceRoot, ''));
}
```

Conclusion

Nx’s core premise is to make builds faster through remote caching of tasks and distributed task execution. However, this only works reliably if the target inputs are correctly defined and complete. Missing inputs can lead to false cache hits, stale builds, and inconsistent results. Nx currently does not provide any built-in way to validate target inputs, which makes reliable builds hard to guarantee, especially in large, complex monorepos.

Personally, I would recommend not pulling from the cache in critical environments (like production builds) unless you are 100% sure that your target inputs are complete and correct. Otherwise, you risk deploying stale or incorrect artifacts. However, that defeats the purpose of using Nx to make builds faster, so ideally Nx would provide better tooling for input validation and sandboxing in the future.

For now, I hope this post provided some insights into the problem of missing Nx target inputs and how to work around it using eBPF tracing. The solution is far from perfect, but it is a starting point to validate and improve the reliability of Nx builds.

Stefan Haas

Senior Software Engineer at Microsoft working on Power BI. Passionate about developer experience, monorepos, and scalable frontend architectures.
